Learning Collaborative Applies QI to Diagnostic Error

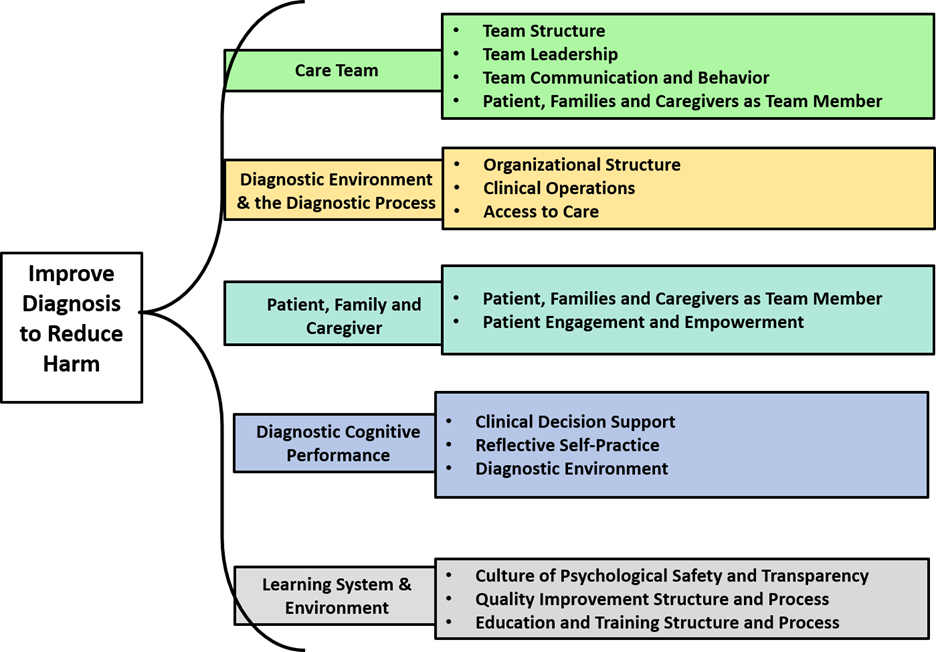

Driver Diagram developed in the SIDM/IHI Learning Collaborative funded by the Gordon and Betty Moore Foundation.

While quality improvement (QI) techniques have resulted in many changes in health care, using QI to drive improvements in diagnosis is a developing field. In partnership with the Institute for Healthcare Improvement (IHI), the Society to Improve Diagnosis in Medicine (SIDM) brought six leading medical centers together into the SIDM/IHI Learning Collaborative to apply QI to diagnostic quality and safety. With a grant from the Gordon and Betty Moore Foundation, SIDM and IHI helped the centers apply the IHI Breakthrough Series methodology to develop specific interventions designed to reduce diagnostic error.

At MedStar Health in Washington, DC, clinicians use the CHEST guideline-based VTE Risk Advisor within Cerner’s MedConnect application. Although use of this tool has been mandatory since 2016, rates of potentially preventable venous thromboembolism (VTE) remain higher than expected. To improve VTE risk assessment by internal medicine physicians at this large academic medical center, the MedStar team sought to improve clinicians’ understanding of the VTE Risk Advisor. Concurrently, they ran small tests of change using a paper-based VTE risk assessment tool among surgeons at a community hospital where the VTE Risk Advisor was not yet in use.

At the University of Michigan’s C.S. Mott Children’s Hospital in Ann Arbor, Michigan, the medical decision-making (MDM) portion of the EHR is the only surrogate that captures the cognitive aspects of decision-making. The Michigan team sought to field-test a narrowly scoped intervention: a scripted, structured patient problem-representation template. Their goal was to demonstrate that structuring the MDM section of pediatric emergency department visit notes with a template could influence, or at least better monitor, the “in the moment” diagnostic decision-making during a pediatric emergency department encounter.

At Nationwide Children’s in Columbus, Ohio, the team introduced a framework for diagnostic deliberation, the “diagnostic time-out,” during the collaborative in an effort to circumvent cognitive biases that may interfere with medical decision-making. The diagnostic time-out asks the medical team to pause and structures the discussion around two questions: 1) What are the two or three most likely diagnoses for this patient? and 2) What is at least one life-threatening or more severe diagnosis that we must consider for this patient? The team hypothesized that fostering an environment that supports active discussion of the diagnosis would improve the differential diagnoses.

At Northwell Health on Long Island, New York, the team predicted that routine use of “teach-back” during the patient encounter would improve communication and decrease diagnostic error. They developed and deployed Plan-Do-Study-Act (PDSA) trials to reduce diagnostic errors in ambulatory, emergency, and inpatient clinical settings by focusing on the roles of the patient, family, and caregiver. The team effectively carried out enhanced patient communication using a scripted teach-back intervention in which providers explained a diagnosis to the patient and, at the end of the encounter, asked the patient to repeat back what they understood about it.

At the University of California, San Francisco (UCSF) in San Francisco, California, the team found they had no system for identifying or measuring diagnostic error beyond institution-wide incident reporting and the DHM Case Review Committee, both of which depend on a provider suspecting an error and referring the case for review. The team needed to characterize the patients affected by diagnostic error, identify the systems failures that contribute to it, and examine its impact on existing quality metrics. To do so, the team tested the following change ideas: 1) develop automated triggers to detect cases at high risk of diagnostic error; 2) complete a two-person Safer Dx/DEER taxonomy review of triggered cases; and 3) develop infrastructure for provider feedback through a) automated programs that notify providers of patient readmission or death and b) direct feedback on the outcome of the two-person review.
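The automated feedback idea in 3a can be illustrated with a minimal sketch. This is not the San Francisco team's program: the record types, field names, and the 30-day look-forward window are all assumptions made for illustration, since the article does not describe the data model or specify a window.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List

# Assumption: the article does not state a follow-up window; 30 days is illustrative.
FOLLOW_UP_WINDOW = timedelta(days=30)


@dataclass
class Discharge:
    # Hypothetical record of a completed encounter.
    patient_id: str
    provider: str
    discharged_on: date


@dataclass
class FollowUpEvent:
    # Hypothetical record of a later outcome for the same patient.
    patient_id: str
    kind: str  # "readmission" or "death"
    occurred_on: date


def feedback_notices(
    discharges: List[Discharge],
    events: List[FollowUpEvent],
    window: timedelta = FOLLOW_UP_WINDOW,
) -> List[str]:
    """Build one notice per event that falls within `window` of a discharge."""
    notices = []
    for d in discharges:
        for e in events:
            if e.patient_id != d.patient_id:
                continue
            if d.discharged_on <= e.occurred_on <= d.discharged_on + window:
                notices.append(
                    f"To {d.provider}: patient {d.patient_id} had a "
                    f"{e.kind} on {e.occurred_on.isoformat()}"
                )
    return notices


discharges = [Discharge("p1", "dr_a", date(2024, 1, 10))]
events = [
    FollowUpEvent("p1", "readmission", date(2024, 1, 20)),  # inside the window
    FollowUpEvent("p1", "readmission", date(2024, 3, 1)),   # outside the window
    FollowUpEvent("p2", "death", date(2024, 1, 15)),        # no matching discharge
]
notices = feedback_notices(discharges, events)
print(notices)
```

In this sketch, only the first event produces a notice. A real system would draw these records from the EHR and would need to define which provider receives the feedback.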

At Tufts Medical Center in Boston, Massachusetts, the team focused on testing the Outpatient Radiology Results Notification Engine. The Results Engine is designed to send notifications about outpatient orders and results to the Tufts Medical Center physicians who place those orders. This is a potentially generalizable approach for identifying missed and delayed test results, even in health systems employing multiple EMRs. The workflow starts when a radiology order is placed for a patient and an appointment is scheduled. The Results Engine then sets a timer that expires 14 days after the date and time of the outpatient test appointment.
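The timer step can be sketched as follows. Only the 14-day interval measured from the appointment comes from the description above. The class, its field names, and the rule for what happens at expiry (flag the order if no result is on file) are assumptions for illustration, not the Results Engine's actual design.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

# From the article: the timer expires 14 days after the test appointment.
TIMER_INTERVAL = timedelta(days=14)


@dataclass
class RadiologyOrder:
    # All field names are hypothetical, chosen for illustration only.
    order_id: str
    ordering_physician: str
    appointment_at: datetime
    result_received_at: Optional[datetime] = None

    @property
    def timer_expires_at(self) -> datetime:
        """Expiry is measured from the appointment, not from the order."""
        return self.appointment_at + TIMER_INTERVAL


def needs_notification(order: RadiologyOrder, now: datetime) -> bool:
    """Assumed rule: flag an order whose timer has expired with no result on file."""
    return order.result_received_at is None and now >= order.timer_expires_at


order = RadiologyOrder("ORD-1", "dr_smith", datetime(2024, 3, 1, 9, 0))
print(order.timer_expires_at)                            # 2024-03-15 09:00:00
print(needs_notification(order, datetime(2024, 3, 10)))  # False: timer still running
print(needs_notification(order, datetime(2024, 3, 16)))  # True: expired, no result
```

Keying the timer to the appointment rather than the order date means a rescheduled appointment would move the expiry along with it, which is one plausible reason for the design described.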

The Collaborative encountered several key challenges and successes in conducting quality improvement for diagnosis. The teams mapped their interventions to the diagnostic error reduction theory of change, expressed as a driver diagram with primary and secondary drivers. That the teams could map their varied interventions to it suggests that the drivers are generalizable across clinical settings and that the driver diagram is a useful tool for reducing the risk of diagnostic error. The six participating organizations used the driver diagram to identify opportunities to decrease diagnostic error and to address them in an actionable, tactical way. Additionally, sites found that IHI’s methodology, combined with rapid PDSA cycles, was an effective way to implement the interventions identified in the driver diagram and reduce diagnostic error. Time was intentionally devoted to this approach during the learning sessions because, although the hypothesis may be the same, quality improvement methodology requires a paradigm shift from traditional research study design. Research and improvement science both aim to improve the quality of care, but they collect, use, and evaluate data in distinctly different ways.

Despite the successes, one limitation could not be overcome. The original goal was to develop a global outcome measure across all six pilot sites. Given the complexity of the diagnostic process and the diversity of the interventions, however, each team developed its own set of measures, with little overlap among them. This was especially challenging for the improvement teams because no “apples-to-apples” comparison was possible, so cross-site comparison could not be used to validate many findings.

For more information on the SIDM/IHI Learning Collaborative or to learn more about a specific project described above, please visit the Driving Quality Improvement Efforts page on the SIDM website or reach out to Diana Rusz, SIDM QI Program Manager, at Diana.Rusz@Improvediagnosis.org.
